Seeing Ghosts: Self-driving Cars Aren't Immune From Hackers

Autonomous vehicles “feel” the road ahead with a variety of sensors, and the data they collect is sent through the vehicle’s brain to trigger a response, such as braking. It’s technology that’s far from perfected, yet self-driving trials continue on America’s streets, growing in number as companies chase that elusive driver-free buck.

In one tragic case, a tech company (which has since had a come-to-Jesus moment regarding public safety) dialed back its fleet’s responsiveness to cut down on “false positives,” perceived obstacles that send the vehicle screeching to a stop even when the obstacle is only a windblown plastic bag. The implications were fatal. On the other side of the coin, Tesla drivers continue to plow into the backs and sides of large trucks that their Level 2 driver-assist technology failed to register.

Because all things can be hacked, researchers now say there’s a way to trick autonomous vehicles into seeing what’s not there.

If manufacturing ghosts is your bag, read this piece in The Conversation. It details work performed by the RobustNet Research Group at the University of Michigan, describing how an autonomous vehicle’s most sophisticated piece of tech, LiDAR, can be fooled into thinking it’s about to collide with a stationary object that doesn’t exist.

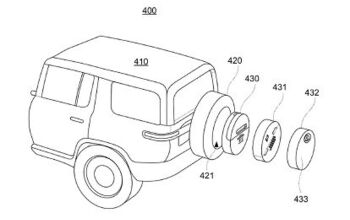

LiDAR sends out pulses of light, thousands per second, then measures how long it takes for those signals to bounce back to the sender, much like sonar or radar. This allows a vehicle to paint a picture of the world around it. Camera systems and ultrasonic sensors, which you’ll find on many new driver-assist-equipped models, complete the sensor suite.
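For a rough sense of the arithmetic behind that picture, here is a minimal sketch of the time-of-flight calculation. It assumes an idealized sensor that measures only the round-trip time of a single pulse; the function name is hypothetical and not taken from any real LiDAR software.

```python
# Speed of light in a vacuum, in meters per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Estimate range from a measured round-trip time (hypothetical helper).

    The pulse travels out and back, so the one-way distance is half of
    the total path the light covered.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


# A pulse that comes back after 200 nanoseconds bounced off something
# roughly 30 meters away.
print(f"{distance_from_round_trip(200e-9):.2f} m")
```

A real sensor repeats this for thousands of pulses per second across many angles, which is how the point-by-point picture gets built up.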

From The Conversation:

The problem is these pulses can be spoofed. To fool the sensor, an attacker can shine his or her own light signal at the sensor. That’s all you need to get the sensor mixed up.

However, it’s more difficult to spoof the LiDAR sensor to “see” a “vehicle” that isn’t there. To succeed, the attacker needs to precisely time the signals shot at the victim LiDAR. This has to happen at the nanosecond level, since the signals travel at the speed of light. Small differences will stand out when the LiDAR is calculating the distance using the measured time-of-flight.

If an attacker successfully fools the LiDAR sensor, it then also has to trick the machine learning model. Work done at the OpenAI research lab shows that machine learning models are vulnerable to specially crafted signals or inputs – what are known as adversarial examples. For example, specially generated stickers on traffic signs can fool camera-based perception.
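The nanosecond claim in the excerpt follows directly from the speed of light. As a hedged illustration (not the researchers' method, and with hypothetical function names), the sketch below works out how long an attacker would have to delay a fake pulse to fake an echo at a chosen distance, and how far the phantom shifts if that timing is off.

```python
# Speed of light in a vacuum, in meters per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def required_delay_s(fake_distance_m: float) -> float:
    """Delay after the sensor's own pulse at which a fake echo must arrive
    to look like a reflection from fake_distance_m (hypothetical helper)."""
    return 2.0 * fake_distance_m / SPEED_OF_LIGHT_M_PER_S


def range_error_m(timing_error_s: float) -> float:
    """Shift in apparent distance caused by a given timing error."""
    return SPEED_OF_LIGHT_M_PER_S * timing_error_s / 2.0


# Faking an obstacle 10 m ahead means hitting a window of about 67 ns.
print(f"delay for 10 m: {required_delay_s(10.0) * 1e9:.1f} ns")

# Being off by a single nanosecond moves the phantom by about 15 cm.
print(f"error per 1 ns: {range_error_m(1e-9) * 100:.1f} cm")
```

This only covers the timing side of the problem; it says nothing about the second hurdle the excerpt describes, which is getting the machine learning model to accept the fake points as an obstacle.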

The research group claims that spoofed signals designed specifically to dupe this machine learning model are possible. “The LiDAR sensor will feed the hacker’s fake signals to the machine learning model, which will recognize them as an obstacle.”

Were this to happen, an autonomous vehicle would slam to a halt, with the potential for following vehicles to slam into it. On a fast-moving freeway, you can imagine the carnage resulting from a panic stop in the center lane.

The team tested two possible light pulse attack scenarios using a common autonomous drive system: one with a vehicle in motion, the other with a vehicle stopped at a red light. In the first setup, the vehicle braked; in the second, it remained immobile at the stoplight.

Needless fear mongering? Not in this case. With the advent of new technology, especially one that exists in a hazy regulatory environment, there will be people who seek to exploit the tech’s weaknesses. The team said it hopes “to trigger an alarm for teams building autonomous technologies.”

“Research into new types of security problems in the autonomous driving systems is just beginning, and we hope to uncover more possible problems before they can be exploited out on the road by bad actors,” the researchers wrote.

[Image: SAE, Ford]

More by Steph Willems


Comments


I live in Boston. There is no need to outsource the study of unpredictable and poorly trained drivers to China.

Aside from anyone deliberately trying to mess with an 'autonomous' vehicle, there's EMI to consider. With more and more underground cables, EMI is pervasive. Sure, you can shield a system. IIRC, in the early days of electronic fuel injection, high-powered two-way radios could cause havoc and engine shutdown. I installed a few anti-static kits with grounding cables from hoods and trunks to body. As late as the '80s, some Bosch systems malfunctioned due to static discharges under very specific conditions. A fix was created with a filter in a cable leading to the ECM. As another post has it, "you need to test the crap out of it."