BMW Rep: Government May Never Allow for Autonomous Cars, Computerized Life and Death Decisions

BMW’s past promises include a pledge to help keep drivers driving in the brave new world of autonomous vehicles. However, it hasn’t entirely sworn off self-driving technology. The company finds itself in a tricky spot, as it’s seen as both a luxury automaker and a performance brand. But it can’t claim to be “The Ultimate Driving Machine” if it doesn’t allow customers to drive.

Automakers and tech firms pushed relentlessly for autonomous driving, making claims that a self-driving nirvana was just around the corner. But current technology proved less than perfect in practice and modern autonomous vehicles require constant human involvement to operate safely, just like any normal car. Despite making strides, the industry seems torn on how to appease everyone.

The government is even more in the dark. While lawmakers initially agreed with industry rhetoric (that autonomy will save lives and usher in a new era of mobility), recent events sparked skepticism. Few new regulations are appearing in the United States, but there also isn’t any clear legislation to help decide who’s held liable when the cars malfunction. A lot of what-if questions remain unanswered.

BMW thinks this will be the main reason why autonomous cars fail.

It’s surprising to hear an automaker say this. The industry seems hell-bent on ramming this technology down our collective throats, consequences be damned. But Ian Robertson, BMW’s special representative in the United Kingdom, says that government regulations will probably stop autonomous features before they can become normalized.

“I think governments will actually say ‘okay, autonomous can go this far,’” he told Auto Express. “It won’t be too long before government says, or regulators say, that in all circumstances it will not be allowed.”

Robertson said programming a car to make decisions between one life and another is extremely difficult and involves too many moral implications. “Even though the car is more than capable of taking an algorithm to make the choice, I don’t think we’re ever going to be faced where a car will make the choice between that death and another death.”

Meanwhile, Mercedes-Benz says it will always have its autonomous vehicles prioritize the life of the driver in the event of a crash. It’s an interesting problem, one the Massachusetts Institute of Technology has been working on by allowing people to take an ethics-based quiz that forces decisions in a no-win scenario. The test, called Moral Machine, collects data on how people feel autonomous development should progress. It also reveals the problems associated with giving a self-driving car a difficult decision when it comes to who lives and who dies.

BMW isn’t leading the charge in terms of autonomous development, though it does operate several fleets of self-driving vehicles. It’s actively developing the technology. But Robertson knows it can’t make its way to market until it’s objectively error-free.

“...the technology is not mature right now,” he said. “The measure of success is how many times the engineer has to get involved. And we’re currently sitting at around three times [every 1,000 km].” While Robertson admitted that sounded promising, he said it was still unacceptable. “It has to be perfect,” he concluded.

Reaching perfection takes time and, even though semi-autonomous systems (like Tesla’s Autopilot) proved impressive, fatal crashes involving that system heightened scrutiny and fueled skepticism. Self-driving cars have to operate virtually error-free to gain public acceptance. Pulling that off requires more work and maybe even a complete redesign of our transportation infrastructure, as well as the rules that govern it.

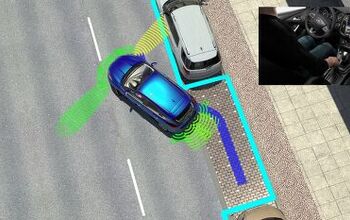

[Image: BMW]

A staunch consumer advocate tracking industry trends and regulation. Before joining TTAC, Matt spent a decade working for marketing and research firms based in NYC. Clients included several of the world’s largest automakers, global tire brands, and aftermarket part suppliers. Dissatisfied with the corporate world and resentful of having to wear suits every day, he pivoted to writing about cars. Since then, he has become an ardent supporter of the right-to-repair movement, been interviewed on the auto industry by national radio broadcasts, driven more rental cars than anyone ever should, participated in amateur rallying events, and received the requisite minimum training as sanctioned by the SCCA. Handy with a wrench, Matt grew up surrounded by Detroit auto workers and managed to get a pizza delivery job before he was legally eligible. He later found himself driving box trucks through Manhattan, guaranteeing future sympathy for actual truckers. He continues to conduct research pertaining to the automotive sector as an independent contractor and has since moved back to his native Michigan, closer to where the cars are born. A contrarian, Matt claims to prefer understeer, stating that front- and all-wheel-drive vehicles cater best to his driving style.

More by Matt Posky

Comments

Duh! The bigger the vehicle, the more difficult it is to have it maneuver autonomously through dense city traffic. That's the reason buses will never become self-driving, unless they have their own lane. Why is that? The bigger the vehicle, the more road space it occupies, and the smaller the margin to evade other road users. Automakers use self-driving as a luxury feature on their more expensive, and therefore bigger, cars. Smart cars are the first ones to make self-driving.

I say we only allow Tesla to make cars that drive while their owners die.